Data preparation processes (ETL) frequently require workflow capabilities to conditionally trigger other processes and send notifications (email, text, etc.) when a task has been completed. Companies often run processes and reports based on assumed completion times (successful or not). It causes havoc when hiccups occur and reports or processes run without anybody being notified. Pyramid’s Master Flow capabilities provide advanced users the flexibility to perform complex workflow, data cleansing, and manipulation tasks using multiple data flows and data models.

The problem

Batch task processing times can vary greatly depending on a host of reasons, causing uncertainty about expected completion times. When tasks complete after assumed times, the entire workflow is disturbed, often resulting in chaos.

Third-party BI tools offer extremely basic ETL capability, with no pipeline processing of data or workflow capabilities and no ability to run multiple or conditional flows—resulting in daily batch jobs being run unnecessarily. Without the ability to pass variables to subsequent tasks, control of the workflow process is severely hampered, limiting or preventing essential time-saving actions like incremental updates (see blog 40). Further, most BI tools cannot send alerts and notifications to multiple recipients when batch jobs run (successfully or not), leaving users who depend on up-to-date data in the dark.

Pyramid’s solution

Pyramid’s Master Flow feature (found within the Model app) gives users the ability to construct multiple data flows using multiple data models with fully customized conditions and success factors. Data flows can be configured with one or multiple conditions using either simple point-and-click methodology or Pyramid’s comprehensive PQL code for advanced users.

Users can set and transfer variables to control and expedite data flows. They can invoke SQL scripts, Rest APIs, and command line execution processes at any point in the data flow using variables. In addition, Pyramid provides extensive looping capability, using both the “for” and “while” loop constructs, capable of executing all processes. Email and text notifications can be sent using dynamic conditional criteria, and message content can be built using expressions and PQL code. Lastly, users can configure Pyramid events to schedule and distribute publications or execute master flows or data models.

Business case

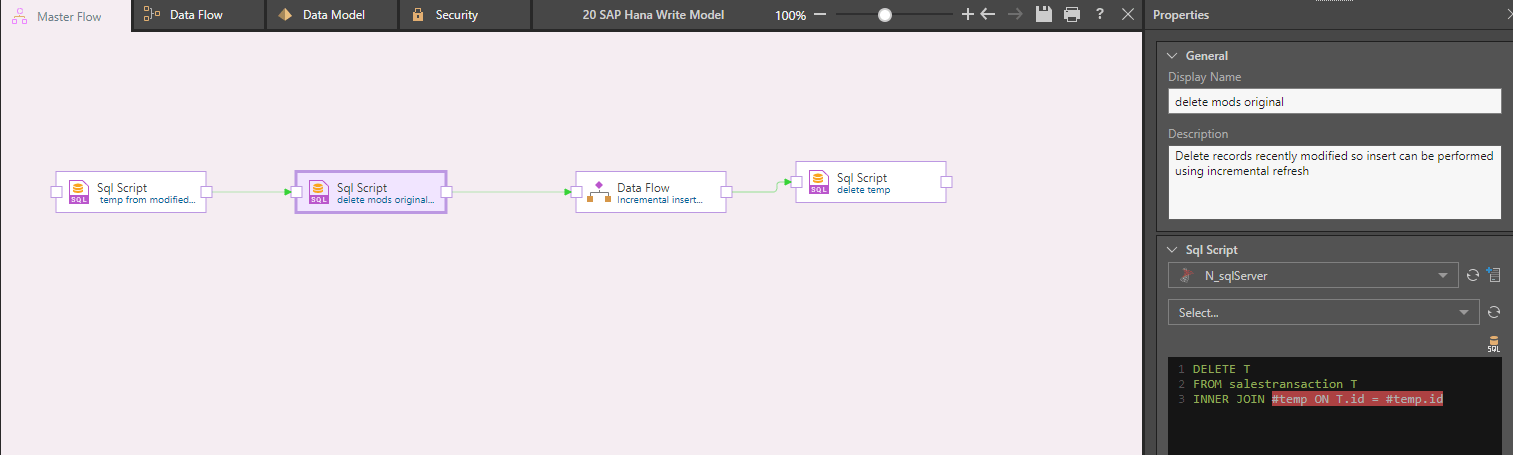

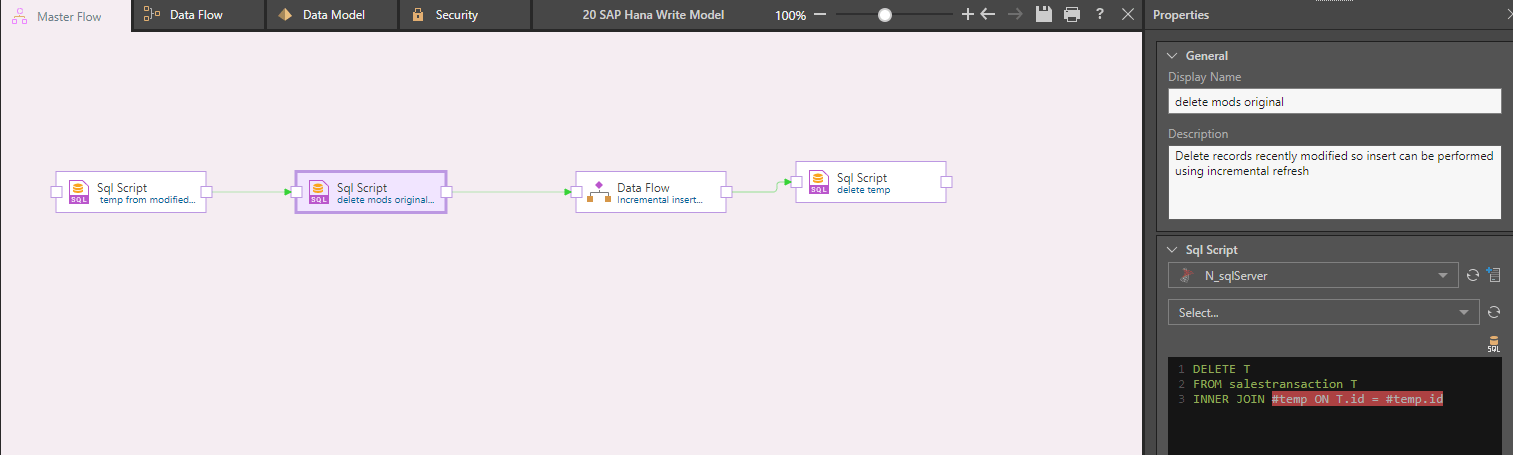

Lee is a BI Analyst for ACE Industries and uses the Master Flow capabilities to solve several everyday challenges. ACE uses Pyramid to analyze their Oracle database that holds a five-year history of transactional data with over 200 million rows (see blog on incremental data flows). ACE have a legacy sales system that defies accepted audit practices and allows historical records to be modified, without changing the date timestamp. To design a solution that inserts a date timestamp without overwriting or duplicating transactional data, Lee has written SQL code to identify records changed using the HASH function and write them to a temporary file. A second SQL function deletes all modified records on the original table so she can perform an efficient insert instead of an update on the table. She then creates a single master flow that first calls the SQL functions, then calls the incremental update using the temp file to insert all modified records. The flow ends by calling SQL code to delete and drop the temp file.

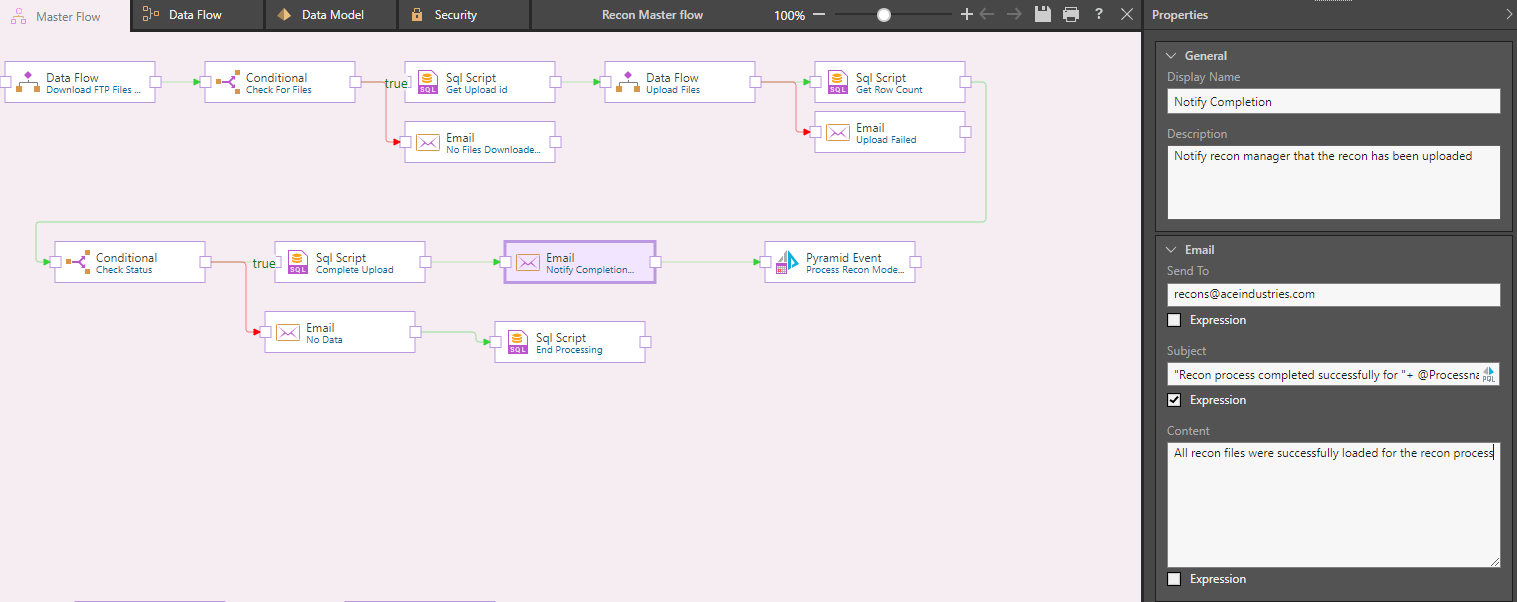

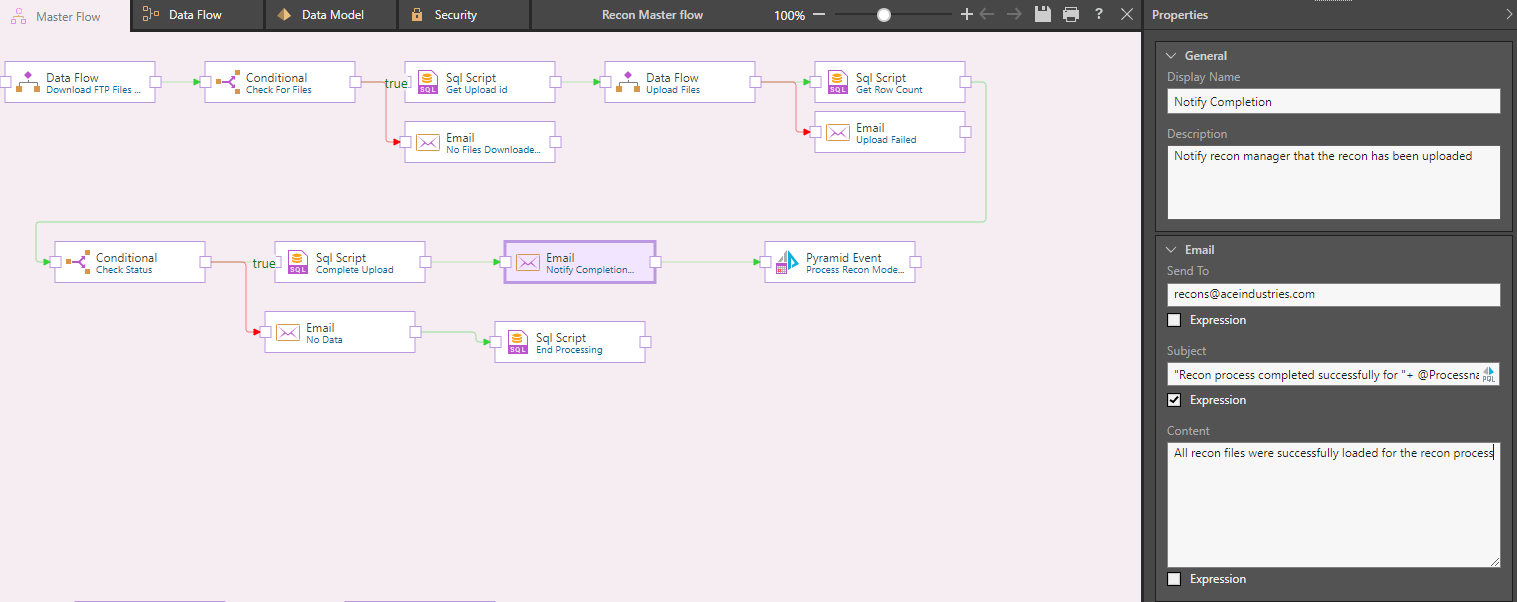

Let’s look at another example. Lee has several data files from retail companies selling ACE’s electronic products (sim cards and data packages) on their behalf to the retailers’ own customers, resulting in reverse billing from the retailers. For billing reconciliation purposes, ACE loads the data files onto their system and performs an automatic daily reconciliation against their own records. As the files don’t always arrive on time, and sometimes arrive empty, Lee has constructed a conditional master flow to manage the entire process, including the sending of notifications.

Lee executes a data flow to download FTP files from ACE’s FTP server. She then checks if the files are there. If successful, she kicks off the file upload process. If unsuccessful, an email is sent to the administrator to follow up. After uploading the files, if successful, SQL is run to determine the number of records loaded. If the upload was unsuccessful, an email is sent to the support staff. A record count check is then made—if data exists, a SQL script is run to complete the upload process, if no records exist, the support staff are notified via email. Variables are used throughout the data flow using expressions to build content. After the upload has completed, an email notification is sent out and a Pyramid event is executed to process the reconciliation model.

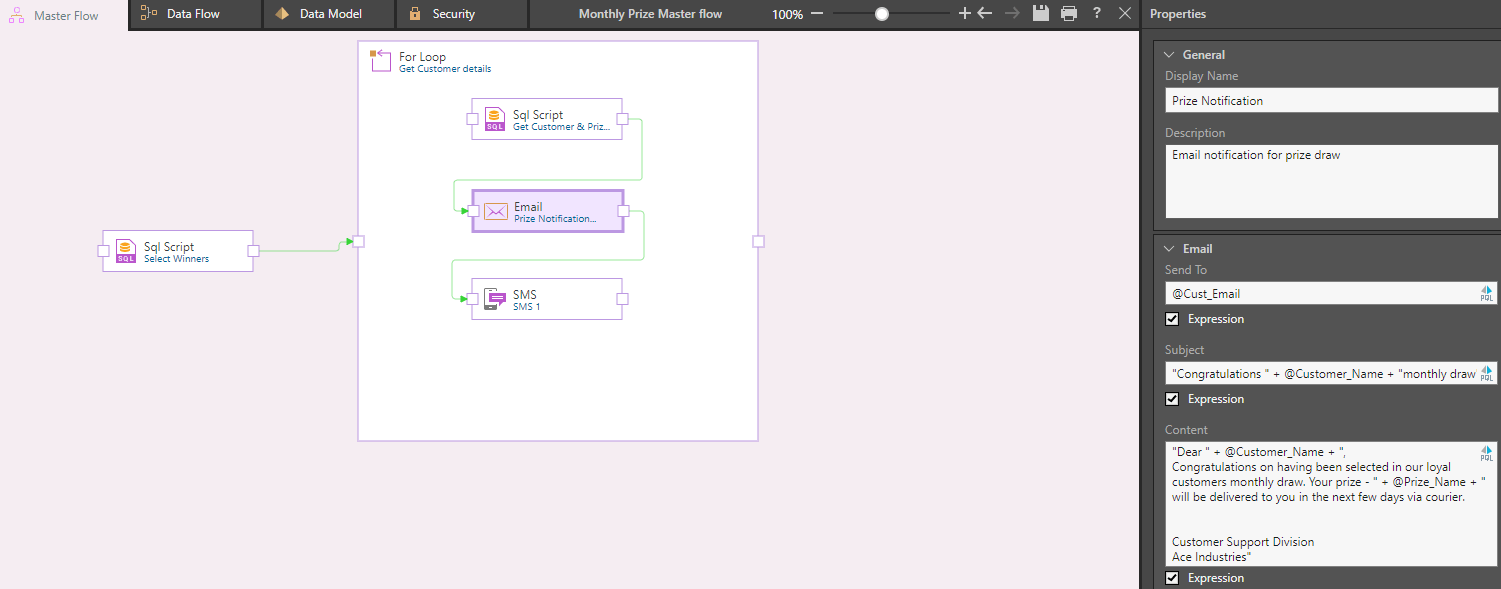

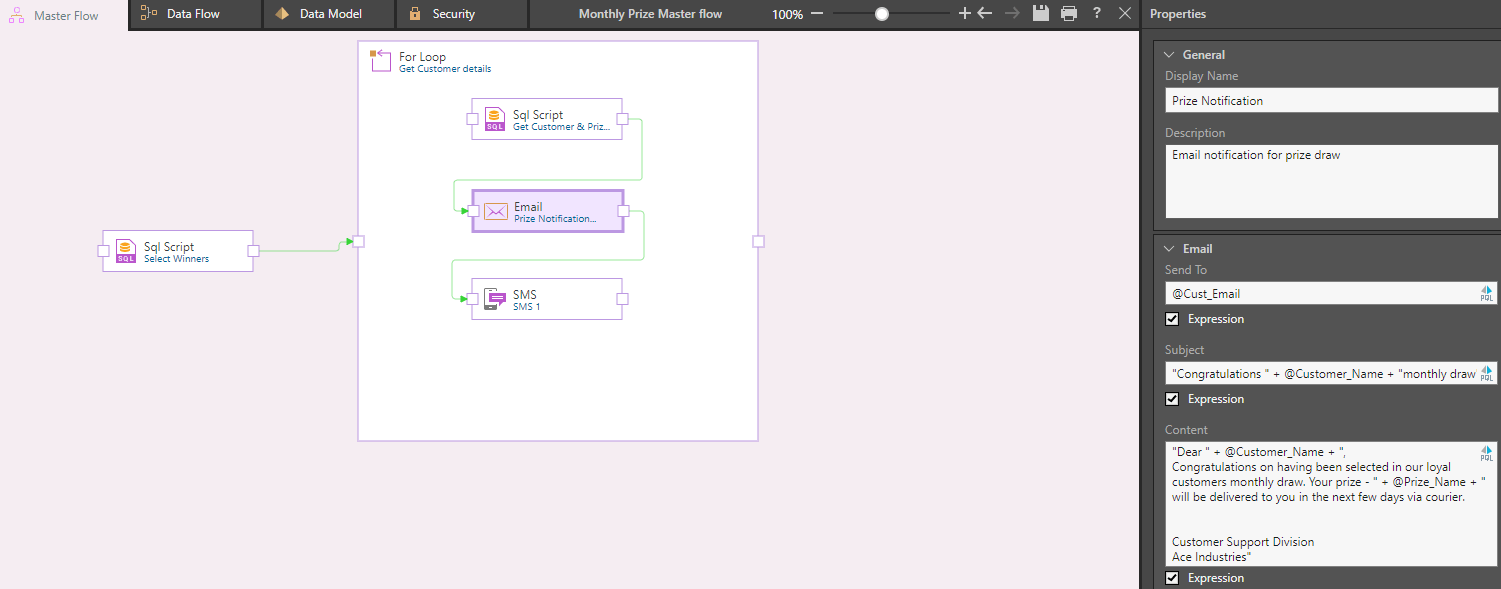

Now that she’s resolved the date timestamp issues and set up notifications on a critical reconciliation process, Lee uses the master data flows to address a different issue. ACE have a monthly draw for their active customers. A random process selects winners for surprise gifts. Lee has created a custom master flow to manage the notification of the prizewinners. She runs a SQL script that randomly selects 50 customers and allocates prizes. A “for” loop is then activated that runs a SQL script to fetch the customer email, phone, and prize details. Then notifications run, notifying the customers about their prize via both email and SMS. The email and SMS messages are both constructed using Pyramid’s expressions and variables, set using the SQL scripts.

Summary

ETL processes require workflow capabilities to conditionally trigger other ETL processes and notifications when a task has completed. By relying on assumed completion times, tasks are often not run, resulting in dashboards and reports not being populated. Other BI tools lack considerable incremental refresh functionality by not providing support for injection of variables into customizable SQL, Python, and R scripts. Without powerful looping and conditional workflow tools, they can’t perform notifications and alerts for common business scenarios.

Pyramid’s Master Flow provides advanced users the flexibility to perform complex workflow, data cleansing and manipulation using multiple data flows and data models. Conditional flows and looping functions to execute process provide powerful workflow and notification control features. The execution of REST APIs, command line processes, and SQL, R, and Python scripts using variables allow for flexible process control. Email and text notifications and message content can be built using expressions and PQL code. Lastly, Pyramid events can be configured to schedule and distribute publications or execute master flows or data models.